Model Context Protocol (MCP) is a “standardized communication protocol” that enables seamless integration between AI assistants and external data sources. It’s an open standard developed by Anthropic that defines how AI models, applications, and agents can “connect to external data sources, tools and systems” in a unified way.

MCP as a Universal Connector #

MCP acts as the bridge layer that unifies how AI interacts with everything around it, from files and databases to SaaS platforms and on-prem systems. Think of it as USB for AI systems.

Before USB existed, every device had its own type of cable: printers, cameras, and phones all used different ports and drivers. It was messy, slow, and incompatible. Then came USB, which gave everyone a universal standard: one port that any device could use to communicate with your computer.

Without MCP, every AI system would need a custom integration for each of these systems, meaning separate authentication, schema handling, and message formats. MCP defines a common communication standard between AI applications (hosts and their clients) and external systems (servers).

MCP design benefits:

- Plug-and-play extensibility - Add and remove data sources or tools without changing the underlying model or host logic.

- Reduced complexity - MCP removes the burden of building a custom API integration for every system.

- Consistency - Every system speaks the same protocol.

- Interoperability - It’s vendor-neutral, so any MCP-compatible AI system can work with any MCP-compatible service.

MCP vs Traditional APIs #

At first glance, MCP might sound like just another API, because at the end of the day both are ways for one system to talk to another. But here is the catch: APIs connect applications, while MCP is designed specifically to connect AI models (like LLMs and other agents) to context and tools in a standardized, model-friendly way.

Usage differences:

- APIs enable communication between software systems (backend ↔ frontend).

- MCP:

  - Enables AI models and agents to connect to external data and tools in a standard way.

  - Provides a framework in which AI systems (like LLMs) reason in natural language and act through standard requests.

Standardization:

- Traditional APIs are service-specific, with unique endpoints, request formats, and authentication.

- MCP:

  - Consider a scenario with 10 different APIs, each with its own endpoints, request format, and response structure. Instead of integrating an AI agent with each of these 10 APIs separately, we can standardize access through MCP.

  - Every MCP-compatible system exposes its resources and actions through a shared format (using primitives like “resources”, “prompts”, and “tools”).

  - The AI model doesn’t need to learn each API; it just understands MCP’s schema and uses it everywhere.
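To make the “shared format” concrete, here is a minimal Python sketch (standard library only) of tool definitions in the shape an MCP server returns from `tools/list`: a name, a description, and a JSON Schema `inputSchema`. The tools `get_order_summary` and `create_ticket` and the helper `make_tool` are hypothetical; only the field names follow the MCP specification.

```python
import json


def make_tool(name: str, description: str, properties: dict, required: list) -> dict:
    """Build a tool definition in the shape MCP servers return from `tools/list`."""
    return {
        "name": name,
        "description": description,
        # MCP describes a tool's arguments with a JSON Schema object.
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


# Two unrelated backends (an order service and a ticketing system, both hypothetical)
# end up described in the same format, so one client can handle both.
tools = [
    make_tool(
        "get_order_summary",
        "Return a summary of a customer order.",
        {"order_id": {"type": "string"}},
        ["order_id"],
    ),
    make_tool(
        "create_ticket",
        "Open a support ticket.",
        {"title": {"type": "string"}, "priority": {"type": "string"}},
        ["title"],
    ),
]

print(json.dumps({"tools": tools}, indent=2))
```

The design point is that the agent only needs to understand this one descriptor shape, regardless of how each backend is implemented.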

Discovery and Context Awareness:

- APIs require knowing endpoints beforehand.

- MCP allows dynamic discovery: an MCP client can query a server to learn:

  - What tools it offers (`tools/list`)

  - What resources are available (`resources/list`)

  - What inputs each tool requires (every tool returned by `tools/list` includes an `inputSchema`, a JSON Schema describing its arguments)

- This helps an AI agent automatically explore the available capabilities and decide which tools to use for a given task.
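The discovery step can be sketched as plain JSON-RPC messages. This is a minimal Python illustration, not an SDK: the `request` helper, the `get_order_summary` tool, and the canned `tools_list_result` are hypothetical stand-ins for a real client and server; the method names `tools/list`, `resources/list`, and `prompts/list` are the ones MCP defines.

```python
import itertools
import json
from typing import Optional

# Simple id generator; MCP requires request ids not to be reused within a session.
_ids = itertools.count(1)


def request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request with a fresh id."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Discovery requests a client might send once the session is initialized.
discovery = [request("tools/list"), request("resources/list"), request("prompts/list")]

# A made-up `tools/list` result standing in for a real server's reply.
tools_list_result = {
    "tools": [
        {
            "name": "get_order_summary",
            "description": "Return a summary of a customer order.",
            "inputSchema": {
                "type": "object",
                "properties": {"order_id": {"type": "string"}},
                "required": ["order_id"],
            },
        }
    ]
}


def required_inputs(result: dict, tool_name: str) -> list:
    """Read a tool's required arguments from its inputSchema."""
    for tool in result["tools"]:
        if tool["name"] == tool_name:
            return tool["inputSchema"].get("required", [])
    raise KeyError(tool_name)


for msg in discovery:
    print(json.dumps(msg))
print(required_inputs(tools_list_result, "get_order_summary"))
```

An agent can use the same `required_inputs`-style lookup for any server, which is what makes discovery generic rather than per-API.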

Context Sharing:

- APIs return data.

- MCP is designed around context, helping AI models understand and retain relevant information across interactions.
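One way a host might use MCP’s context features is to read a resource and place its contents in the model’s prompt. The sketch below assumes a made-up `orders://12345` resource and a hand-written server reply; `to_context` is a hypothetical helper, while the `resources/read` method and the `contents`/`uri`/`mimeType`/`text` fields follow the MCP result shape.

```python
import json

# Request for one resource; the "orders://" URI scheme is invented for this example.
read_request = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "resources/read",
    "params": {"uri": "orders://12345"},
}

# A hypothetical server reply in the shape of a `resources/read` result.
read_response = {
    "jsonrpc": "2.0",
    "id": 7,
    "result": {
        "contents": [
            {
                "uri": "orders://12345",
                "mimeType": "application/json",
                "text": json.dumps({"status": "shipped", "items": 3}),
            }
        ]
    },
}


def to_context(response: dict) -> str:
    """Flatten text contents into a block the host can add to the model prompt."""
    parts = []
    for item in response["result"]["contents"]:
        # Text resources carry `text`; binary ones carry base64 `blob` and are skipped here.
        if "text" in item:
            parts.append(f"[{item['uri']}]\n{item['text']}")
    return "\n\n".join(parts)


print(to_context(read_response))
```

Labeling each block with its URI lets the model (and the user) see where a piece of context came from.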

MCP Architecture and Core Components #

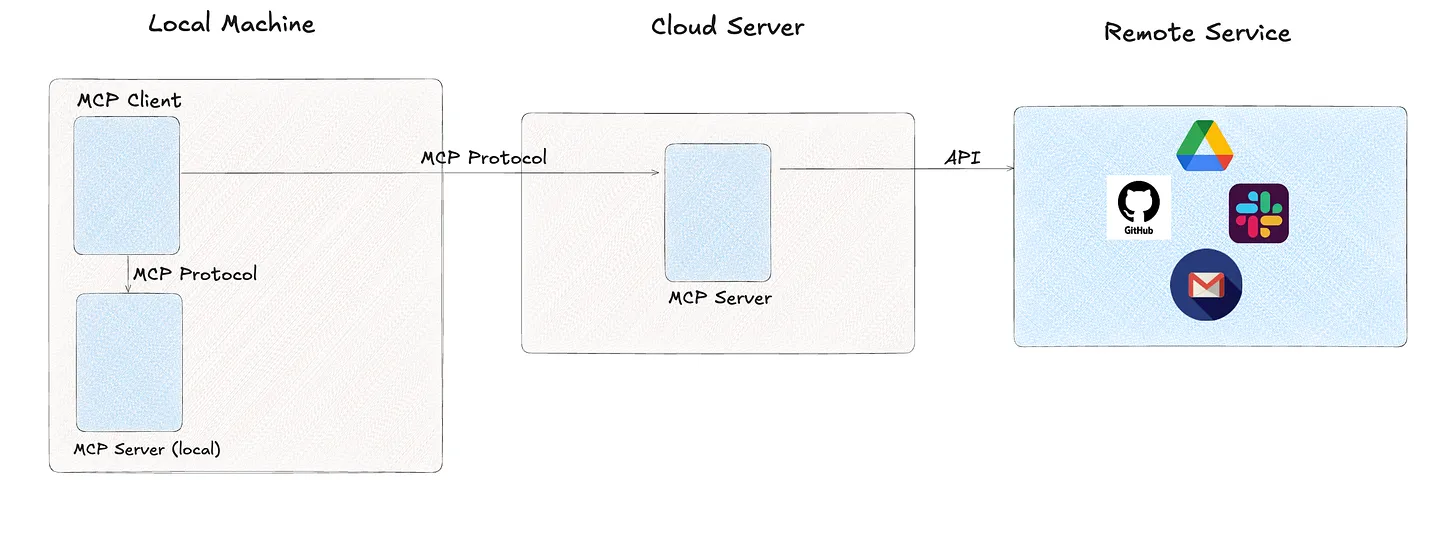

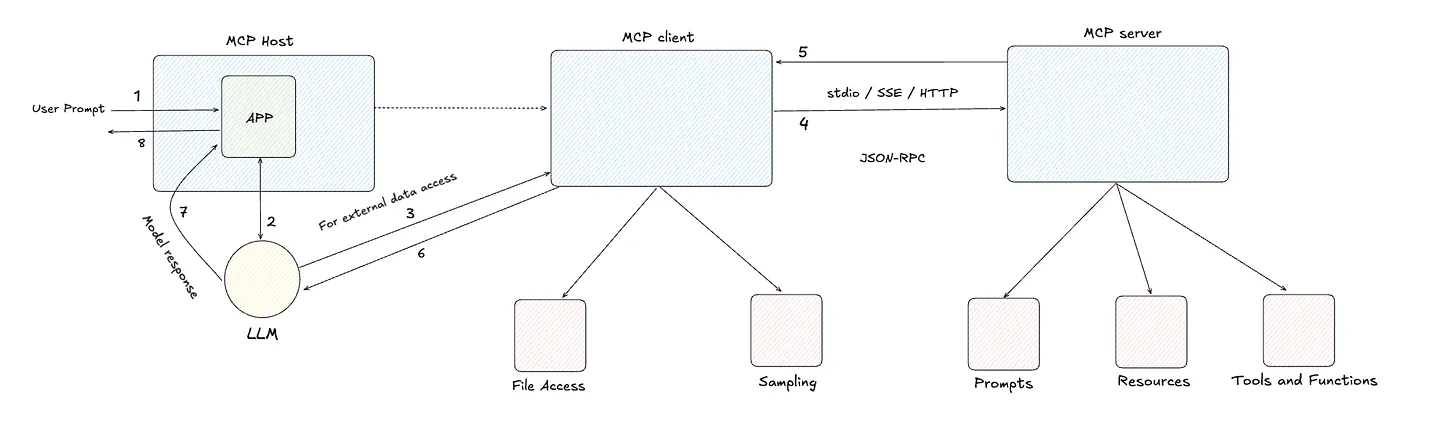

MCP follows a client-server architecture with three main components:

1. Host Application (MCP host)

This is the AI application or agent runtime that orchestrates the interaction between the model and external systems, for example a chatbot, a coding assistant, or an AI agent. The host manages the session lifecycle, model prompts, and policies, and can run multiple MCP clients at once.

2. Client (MCP Client)

This is the logical component that maintains a connection to a single MCP server instance. It acts as a proxy between the host application and the MCP server (external tools).

3. Server (MCP Server)

This is the service that exposes resources, tools (actions), and prompts in the MCP schema. Servers implement MCP endpoints and can be anything that surfaces data or capabilities, for example connectors for GitHub, Jira, or Slack, or database proxies.

Clients and servers map 1:1. If an AI agent needs to connect to Slack and a database, the host spawns a separate MCP client for each server.
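The 1:1 mapping can be illustrated with a small in-memory model. In this hypothetical Python sketch, `Host` and `MCPClient` do no real I/O; the point is only that the host keeps one client per server, so connecting to Slack and a database yields two separate clients.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class MCPClient:
    """One connection to exactly one MCP server (hypothetical; no real I/O)."""
    server_name: str
    transport: str  # illustrative label such as "stdio" or "http"


@dataclass
class Host:
    """The AI application: owns the model session and one client per server."""
    clients: Dict[str, MCPClient] = field(default_factory=dict)

    def connect(self, server_name: str, transport: str) -> MCPClient:
        # Keep the 1:1 mapping: each server gets its own client, and no client is shared.
        if server_name not in self.clients:
            self.clients[server_name] = MCPClient(server_name, transport)
        return self.clients[server_name]


host = Host()
slack = host.connect("slack", "http")
db = host.connect("database", "stdio")
print(sorted(host.clients))  # two servers, two clients
```

Reusing the existing client when the same server is requested again is a choice made in this sketch, not something the protocol dictates.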

Core Primitives #

- Resources - External data objects that a server exposes (files, logs, databases, APIs). They are the fundamental units of data exchange.

- Prompts - Reusable templates that servers expose to guide AI assistants through specific workflows and to structure their interactions with LLMs.

- Tools and Functions - Executable functions that AI assistants can invoke to perform actions or retrieve dynamic information from external systems.
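Each primitive comes with a discovery method and a method for using it. The mapping below lists those standard JSON-RPC method names; the `use` helper and the example tool, prompt, and file names are hypothetical.

```python
# Standard MCP methods per primitive, as (discover, use) pairs.
PRIMITIVE_METHODS = {
    "resources": ("resources/list", "resources/read"),
    "prompts": ("prompts/list", "prompts/get"),
    "tools": ("tools/list", "tools/call"),
}


def use(primitive: str, request_id: int, params: dict) -> dict:
    """Build the request that reads a resource, fetches a prompt, or calls a tool."""
    _, method = PRIMITIVE_METHODS[primitive]
    return {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}


# Hypothetical names and URIs; a real server defines its own.
read_resource = use("resources", 1, {"uri": "file:///var/log/app.log"})
get_prompt = use("prompts", 2, {"name": "summarize_order", "arguments": {"order_id": "12345"}})
call_tool = use("tools", 3, {"name": "get_order_summary", "arguments": {"order_id": "12345"}})

for msg in (read_resource, get_prompt, call_tool):
    print(msg["method"], msg["params"])
```

Resources are addressed by `uri`, while prompts and tools are addressed by `name` plus an `arguments` object.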

MCP Protocol Layer #

MCP uses JSON-RPC 2.0 as its message format: structured JSON messages exchanged between client and server as requests, responses, and notifications.
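The three JSON-RPC message kinds can be told apart by their fields: a request has a `method` and an `id`, a notification has a `method` but no `id`, and a response has an `id` plus either `result` or `error`. This Python sketch classifies raw messages that way; `kind` is an illustrative helper, and `-32601` is JSON-RPC’s standard “Method not found” error code.

```python
import json


def kind(raw: str) -> str:
    """Classify a JSON-RPC 2.0 message by the fields it carries."""
    msg = json.loads(raw)
    if msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in msg:
        # Requests carry an id and expect a reply; notifications have no id and get none.
        return "request" if "id" in msg else "notification"
    if "error" in msg:
        return "error response"
    if "result" in msg:
        return "response"
    raise ValueError("unrecognized message")


examples = [
    '{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}',
    '{"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}',
    '{"jsonrpc": "2.0", "method": "notifications/initialized"}',
    '{"jsonrpc": "2.0", "id": 2, "error": {"code": -32601, "message": "Method not found"}}',
]
for raw in examples:
    print(kind(raw))
```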

Supported Transports:

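- HTTP (Streamable HTTP) - Used for remote MCP servers hosted on different infrastructure, with more complex networking. The client sends JSON-RPC messages as HTTP POST requests, and the server can answer with a single JSON response or stream messages back over SSE. WebSocket is not one of the specification’s standard transports, though it can be implemented as a custom transport.

- Stdio (standard input/output) - Local IPC, used when client and server run on the same host: the client launches the server as a subprocess and exchanges newline-delimited JSON-RPC messages over its stdin and stdout. It’s fast and simple to implement.

- SSE (Server-Sent Events) - One-directional streaming from server to client over a web connection, suited to real-time updates and a simpler alternative to WebSocket streaming. The older HTTP+SSE transport paired an SSE stream with HTTP POST for client messages; newer specification revisions replace it with Streamable HTTP, which still uses SSE for streaming.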

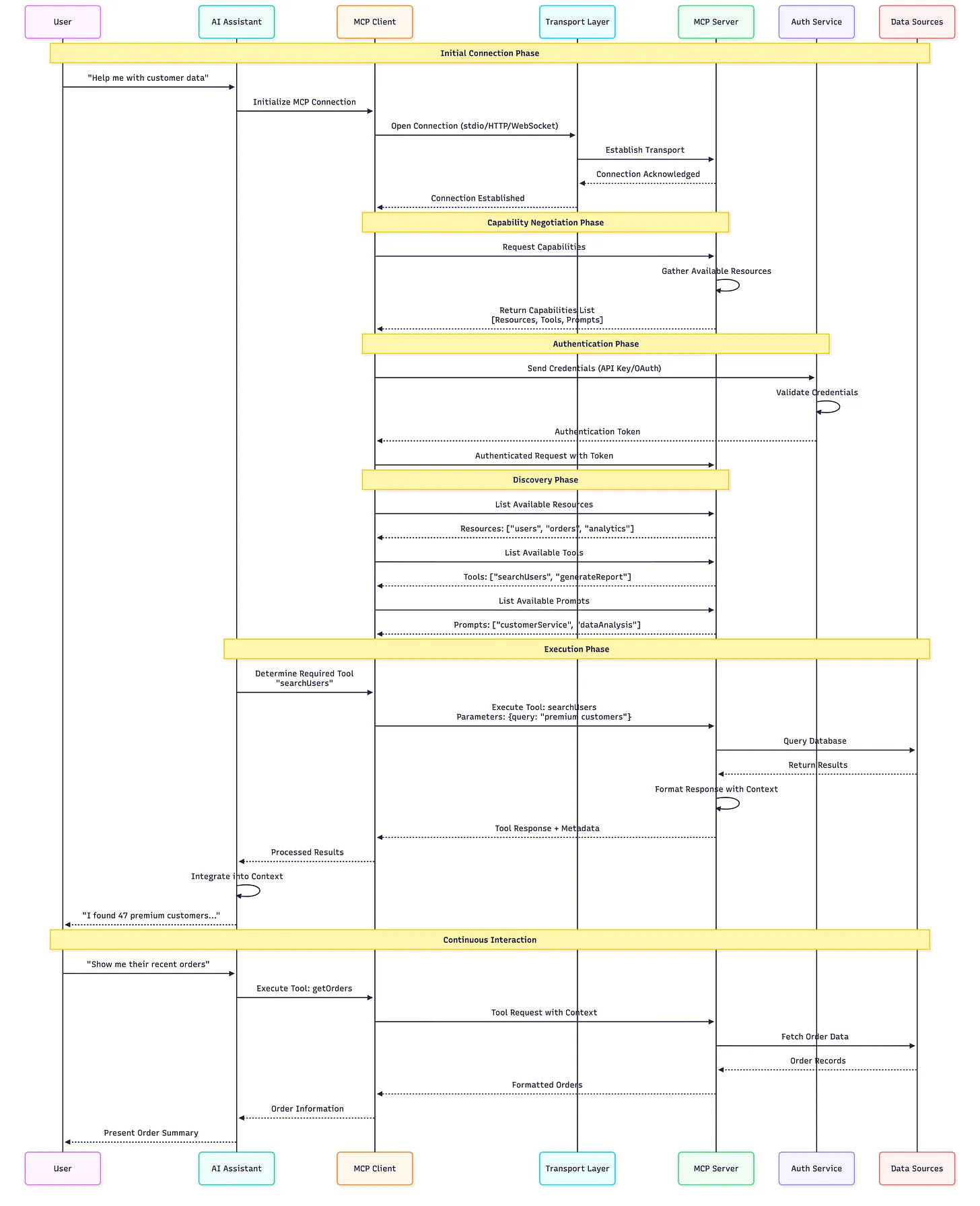
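For the stdio transport, each JSON-RPC message travels as a single line of JSON. Below is a minimal sketch of that framing, with an in-memory `io.StringIO` standing in for the server process’s stdin/stdout pipe (an assumption made so the example runs without a real server); `write_message` and `read_messages` are hypothetical helpers.

```python
import io
import json


def write_message(stream, msg: dict) -> None:
    """Write one message as one line; json.dumps escapes newlines inside strings."""
    stream.write(json.dumps(msg) + "\n")


def read_messages(stream):
    """Yield each newline-delimited JSON-RPC message from a text stream."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# In a real stdio setup the client writes to the server's stdin and reads its stdout;
# an in-memory buffer stands in for that pipe here.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
write_message(pipe, {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    # Hypothetical tool; the embedded newline shows that framing survives escaping.
    "params": {"name": "create_ticket", "arguments": {"note": "line one\nline two"}},
})
pipe.seek(0)
received = list(read_messages(pipe))
for msg in received:
    print(msg["method"])
```

Because `json.dumps` without `indent` never emits raw newlines, one line always equals one message, which is what keeps this transport simple.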

Communication Flow #

The sequence diagram below shows the MCP flow for order summary retrieval.